Kathy Sierra was nice enough to send me an email asking for my thoughts on audio and sound. I sometimes need this impetus to write down even the briefest thoughts on a subject (and these are just brief sketches). The following are her questions and my answers.

Do you agree with me that the power of audio/sound is being greatly overlooked in so many areas of product design, user experience, etc. (as opposed to areas where sound is recognized as crucial, like movies and commercials)?

Yes, I agree, but there is a good reason for it. I would also extend your list of areas where sound is recognized as crucial to include games and toys.

Movies and commercials are passive, shared experiences. Task-based products are interactive and generally not shared. It’s an obvious but crucial difference. Everyone outside of China may agree that noise is something we would rather not experience. But sound is not noise.

Sound is distinguished from noise by the simple fact that sound can provide information.

Sound answers questions and supports the activities that make up a task, so sound is inherently useful. Consider the information provided by the click of a door’s bolt sliding open, the sound of a zipper when you zip up a pair of pants, the whistle of a kettle when the water has boiled, the sound of a river moving in the distance, the sound of liquid boiling or food frying, and the sounds of people talking nearby. In the workplace there is the sound of keys being pressed on a computer keyboard.

Natural sound is as essential as visual information because sound tells us about things we can’t see, and it does so while our eyes are occupied elsewhere. Natural sounds reflect the complex interaction of physical objects: the way one part moves against another and the material the parts are made of. Sounds are generated when materials interact, and the sound tells us whether they are hitting, sliding, breaking or bouncing. Sounds differ according to the characteristics of the objects, how fast things are moving and how far away they are from us.

An extension of the point that tasks are not shared is that the environments in which tasks are completed are shared: one person’s sound is another person’s noise. Visual displays are not as intrusive as auditory ones.

So whether auditory interfaces could or should be used is primarily a question of implementation: how do we restrict the information carried by sound to the person meant to receive it? When we solve this problem cheaply, I think we will see much more use of sound in the development of other products.

Do you see any areas of great leverage, places where audio/sound could be incorporated that could make a big difference in either usability or user experience (even if simply for more *pleasure* in the experience)?

I hesitate to use these buzzwords, but with the popularization of Ajax/Web 2.0 interfaces, it may be a good time for people to experiment further with sound in online application interfaces. Because these interfaces load data in real time, we lose the vital visual cue of a page loading or refreshing. Sometimes the data changes so quickly that there is no cue to follow at all.

But these ideas are always met with criticism. Here is an example from Jeffrey Veen, on sounds: “I stopped counting how many times I tore the headphones from my ears when a site started blaring music or ‘interaction’ cues like pops, whistles, or explosions whenever I moused over something. Am I the only one who listens to music while using my computer?”

I love children’s toys and draw a lot of inspiration from them: very cheap sensors that elicit wonderfully fun feedback. We should have these in everything. Imagine buying a jacket whose snaps sound “heavier” when you close them than they feel or look, like the difference between the sound of a door closing on a Lada and on a Benz. There are lots of possibilities.

Any other comments on your “Adult Chair” experience? What you learned from observing users interacting, etc.?

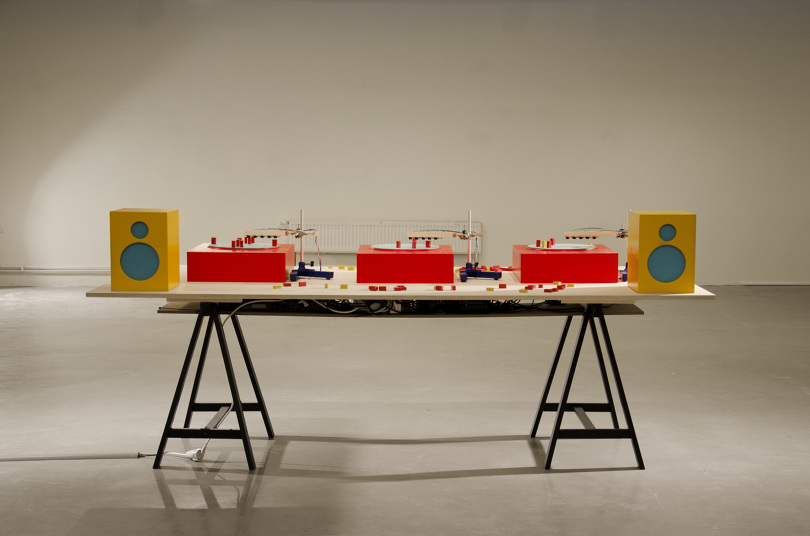

The Adult Chairs were just a small part of a broader set of objectives: creating non-elitist interfaces to musical expression. Though all of my work at that time consisted of prototypes, simple manifestations of ideas I had, I was harshly criticized for the lack of “new science” or extended interactivity. Basically, my work was considered too simple because it used off-the-shelf technology and because people engaged with the activities for only short periods of time. I mostly rejected this criticism because I knew the critics didn’t understand the goals of the project and weren’t watching people actually use the prototypes. Although it was never intended that way, this project became, for me personally, the most convincing case for user-centered design. We videotaped a lot of sessions and gathered a lot of anecdotal data, which drove later iterations of the design.

Some of the conclusions:

- It’s really hard to design interfaces that have no visual responses. In a game we developed (hulabaloo) around an interface similar to Adult Chairs, children kept looking for flashing lights or some other physical response. Eventually they learned to use only their ears, which was good, since it was a music appreciation game. Children here are strongly conditioned to expect a visual response.

- People love being surprised, and they want to have fun. They don’t care if the technology came from Radio Shack; they care whether you can make them smile.

- Features, options, and controls are not needed for people to have fun for a short period of time. To stay engaged for long periods, though, people want that control.

Any other thoughts or tips for the rest of us?

I think that too often, when people think of audio interfaces, they immediately think of the horrible implementations in Yahoo IM, ICQ, and Flash sites with hip-hop soundtracks. Audio can be designed intelligently and elegantly.

Another thought is the difficulty of designing “gray sound”. Computer user interfaces are gray: they are not thought-provoking, they sit in the background, and they are purposely boring. Icons and language localization aside, I think they work everywhere. But how do we design auditory signals that work everywhere? Cultural differences abound, and what data is there to help us?

I live and work in Taiwan, among arguably the noisiest people anywhere (I’m guessing). They “appear” to have a tolerance for noise and a need for sound that is far different from my own. Given that their environment is so full of aural cues, how do we design for them? A Japanese garden is a place of tranquility. A Canadian park is a place of clean nature. A Taiwanese park frequently comes with a soundtrack, as music and nature sounds are piped in to keep it from becoming quiet. Quiet seems to make people uncomfortable. This is just one example of how acceptable or normal levels of aural cues differ across three locations and cultures. I think localizing audio interfaces will be quite challenging.